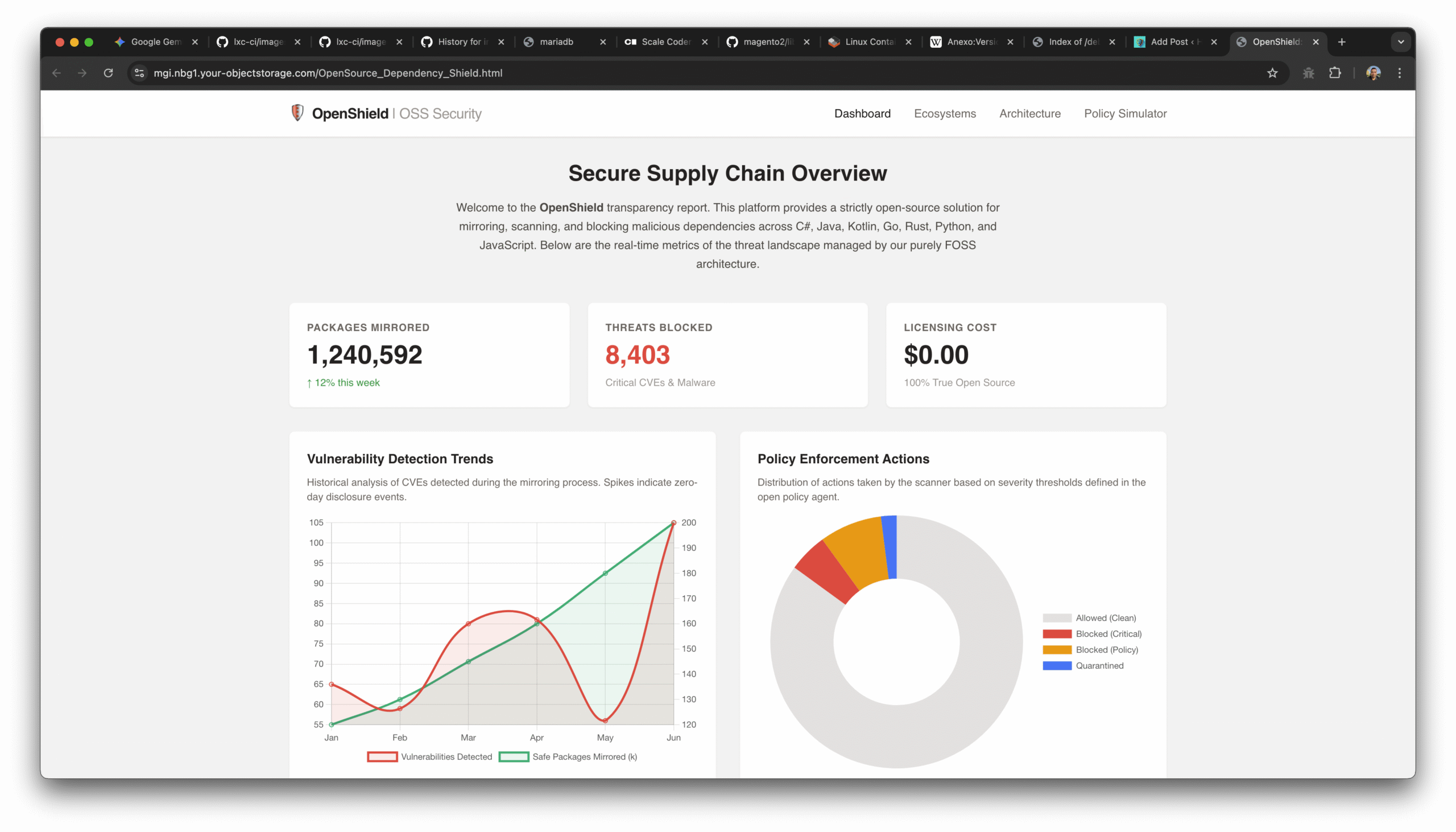

The Great Schism: An Exhaustive Analysis of Systemd’s Architectural Impact and the Resulting Controversy in the Linux Ecosystem

Executive Summary

The introduction of systemd as the default initialization system for the majority of Linux distributions marks one of the most tumultuous and significant architectural shifts in the history of the open-source operating system. While its proponents advocate for systemd as a necessary modernization that brings parallelization, unified management, and robust dependency handling to a fragmented ecosystem, a substantial and technically adept segment of the community remains vehemently opposed. This opposition is not merely a resistance to change but is rooted in deep philosophical divergences regarding the “Unix Way,” concerns over architectural centralization, and specific, verifiable technical failures.

This report provides a comprehensive, expert-level analysis of the systemd controversy. It dissects the philosophical arguments regarding modularity versus monolithic design, investigates the sociological friction caused by its rapid and sometimes aggressive adoption, and performs a forensic fact-check of the most serious technical allegations—ranging from data corruption and security vulnerabilities to hardware damage. By synthesizing historical context, technical documentation, bug reports, and developer discourse, this document aims to elucidate why systemd remains a polarizing force more than a decade after its inception.

1. The Pre-Systemd Landscape and the Motivation for Change

To fully understand the ferocity of the backlash against systemd, one must first understand the environment it replaced and the specific technical problems it sought to solve. The transition was not arbitrary; it was a response to the changing nature of computing hardware and the limitations of the aging SysVinit architecture.

1.1 The Legacy of SysVinit

For decades, the Linux boot process was dominated by SysVinit, a system inherited from AT&T’s UNIX System V. The design of SysVinit was deceptively simple: the kernel started the init process (PID 1), which then ran a series of shell scripts located in directories like /etc/rc.d/. These scripts were executed sequentially, one after another, to start services such as networking, mounting filesystems, and launching daemons.1

While this system was transparent—administrators could easily read and modify the shell scripts—it suffered from significant inefficiencies in a modern context.

- Serial Execution: Because scripts ran one at a time, the CPU often sat idle waiting for I/O operations (like a disk mount) to complete before starting the next service. This resulted in slow boot times, particularly as hardware became more powerful and parallel.

- Fragile Dependency Handling: SysVinit relied on numbered ordering (e.g., S20apache, S50mysql) to manage dependencies. This was brittle; if a service required the network to be fully up, but the network script only brought up the interface without waiting for an IP address, the dependent service would fail.

- Lack of Process Supervision: SysVinit started services from its rc scripts but did not supervise them (only entries in /etc/inittab, such as gettys, could be respawned). If a daemon crashed, SysVinit would not automatically restart it. Administrators had to rely on external tools like monit or complex script logic to handle failures.2
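The contrast between name-based ordering and dependency-resolved startup can be sketched in Python. This is a minimal illustration, not how either init system is implemented: the runlevel directory contents and the dependency map are hypothetical, and the standard-library `graphlib.TopologicalSorter` (Python 3.9+) stands in for a real dependency engine.

```python
from graphlib import TopologicalSorter

def sysv_start_order(rc_entries):
    """Order start links the way SysVinit's rc does: 'S' links run in name
    order, so the two-digit prefix is the only dependency signal."""
    return sorted(name for name in rc_entries if name.startswith("S"))

def parallel_batches(depends_on):
    """Group services into batches whose dependencies are already satisfied.
    Every service inside one batch could be started concurrently."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches

# Hypothetical runlevel 3 directory and dependency map.
rc3 = ["K10apache", "S50mysql", "S20apache", "S10network", "S15syslog"]
deps = {
    "network": set(),
    "syslog": set(),
    "apache": {"network"},
    "mysql": {"network", "syslog"},
}

print(sysv_start_order(rc3))   # → ['S10network', 'S15syslog', 'S20apache', 'S50mysql']
print(parallel_batches(deps))  # → [['network', 'syslog'], ['apache', 'mysql']]
```

The first function encodes dependencies only implicitly through the numeric prefix and runs everything one after another; the second derives batches from declared dependencies, which is the property parallel startup relies on. Neither solves the "network fully up" problem by itself: an ordering edge says when a service starts, not whether its dependency is actually ready.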

1.2 The Hardware Revolution and Dynamic Systems

By the late 2000s, the static nature of SysVinit clashed with the dynamic nature of modern hardware. The introduction of hot-pluggable devices (USB), transient network connections (Wi-Fi), and virtualization meant that the state of a system could change rapidly after boot. A strictly ordered, static boot sequence could not elegantly handle a network interface that appeared two minutes after boot, or a USB drive that needed to be mounted dynamically.

Competitors had already moved on. Apple introduced launchd in macOS, which used socket activation to start services on demand.2 Sun Microsystems introduced SMF (Service Management Facility) for Solaris, which offered robust dependency management and XML-based configuration. Linux distributions attempted to bridge the gap with Upstart (pioneered by Ubuntu), which was event-based but still relied heavily on backward-compatible shell scripts.

1.3 The Systemd Solution

Systemd, announced by Lennart Poettering and Kay Sievers, proposed a radical departure. It aggressively parallelized the boot process using “Socket Activation” and “Bus Activation” (via D-Bus). Instead of waiting for a service to start fully, systemd would create the socket the service listens on ahead of time and start the service in the background; the kernel queues incoming connections on that socket until the service is ready to accept them. This allowed dependent services to start immediately without race conditions.2
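The hand-off at the heart of socket activation can be sketched from the service's side. Under the convention documented for `sd_listen_fds(3)`, the manager passes already-open sockets starting at file descriptor 3 and sets `LISTEN_FDS` (how many) and `LISTEN_PID` (which process they are meant for). The Python below is a minimal sketch of that check, not the C library itself; the function names `listen_fds` and `get_listener` and the fallback port are illustrative assumptions.

```python
import os
import socket

SD_LISTEN_FDS_START = 3  # first inherited descriptor under the sd_listen_fds(3) convention

def listen_fds(unset_environment=True):
    """Return descriptors passed in by socket activation, or [] if none (hypothetical helper)."""
    try:
        pid = int(os.environ["LISTEN_PID"])
        count = int(os.environ["LISTEN_FDS"])
    except (KeyError, ValueError):
        return []  # not socket-activated
    if pid != os.getpid() or count <= 0:
        return []  # the variables were meant for a different process
    if unset_environment:
        # Keep child processes from misinterpreting the variables.
        for var in ("LISTEN_PID", "LISTEN_FDS", "LISTEN_FDNAMES"):
            os.environ.pop(var, None)
    return list(range(SD_LISTEN_FDS_START, SD_LISTEN_FDS_START + count))

def get_listener(fallback_port=8080):
    """Adopt the inherited socket if present, else bind one ourselves (hypothetical helper)."""
    fds = listen_fds()
    if fds:
        # With fileno= given, socket.socket() detects family and type from the descriptor.
        return socket.socket(fileno=fds[0])
    return socket.create_server(("127.0.0.1", fallback_port))
```

Because the socket exists before the service process runs, clients can connect at once; their connections simply wait in the kernel's accept queue until the service calls `accept()`.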

However, to achieve this level of coordination, systemd required deep integration with the Linux kernel (cgroups, autofs) and a departure from shell scripts in favor of declarative “Unit Files.” It was this architectural pivot—moving away from imperative scripts to a declarative, binary-centric engine—that planted the seeds of the controversy.1
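To make the imperative-versus-declarative contrast concrete, the sketch below parses a small, hypothetical unit file with Python's standard `configparser`. This is only an approximation of the format: systemd's own parser supports things `configparser` does not (trailing-backslash line continuations, repeated keys such as several `ExecStartPre=` lines, specifiers like `%i`), so it should be read as an illustration rather than a compatible parser. The unit name and paths are invented.

```python
import configparser

# Hypothetical unit: ordering, dependencies and restart policy are data, not script logic.
UNIT_TEXT = """
[Unit]
Description=Example daemon
After=network-online.target
Wants=network-online.target

[Service]
ExecStart=/usr/bin/exampled --foreground
Restart=on-failure

[Install]
WantedBy=multi-user.target
"""

def parse_unit(text):
    """Approximate parse of unit-file text into {section: {key: value}}."""
    parser = configparser.ConfigParser(interpolation=None)  # treat '%' literally
    parser.optionxform = str  # keep keys exactly as written (unit keys are case-sensitive)
    parser.read_string(text)
    return {section: dict(parser[section]) for section in parser.sections()}

unit = parse_unit(UNIT_TEXT)
print(unit["Unit"]["After"])       # → network-online.target
print(unit["Service"]["Restart"])  # → on-failure
```

Where a SysVinit script would implement "wait for the network, start, restart on crash" as shell code, each concern here is a key that the manager interprets. That is exactly what supporters call clarity and critics call opacity: the behavior behind `Restart=on-failure` lives inside systemd rather than in a script the administrator can read.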

2. The Philosophical Schism: The Unix Philosophy vs. Integrated Management

The core of the resistance to systemd is rooted in a fundamental disagreement regarding the design philosophy of Unix-like operating systems. The critique is ideological, concerning the definitions of simplicity, modularity, and transparency that have governed Unix development since the 1970s.

2.1 Violation of the “Do One Thing and Do It Well” Principle

The Unix philosophy, canonized by Doug McIlroy and implemented in the design of the original Bell Labs Unix, emphasizes small, modular utilities that perform a single task efficiently. These tools utilize plain text streams as a universal interface, allowing administrators to chain them together (piping) to solve complex problems without creating complex software.5

Critics argue that systemd violates this tenet by acting as a “super-daemon” that absorbs functionality far beyond the scope of an init system. Traditionally, PID 1’s role is to bootstrap the user space and reap orphan processes. Systemd, however, has expanded to encompass a vast array of system responsibilities.
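The "reap orphan processes" duty is small enough to sketch. A process that exits stays in the process table as a zombie until its parent collects its status with `wait()`; orphans are re-parented to PID 1 (or, on Linux, to the nearest subreaper), so PID 1 must keep collecting them. The Python below is a minimal, non-blocking version of that loop built on `os.waitpid(-1, os.WNOHANG)`; it is an illustration, not code taken from SysVinit or systemd, and `demo` is a hypothetical harness.

```python
import os
import time

def reap_children():
    """Collect every child that has already exited; return (pid, exit code) pairs."""
    reaped = []
    while True:
        try:
            pid, status = os.waitpid(-1, os.WNOHANG)
        except ChildProcessError:
            break  # no children left at all
        if pid == 0:
            break  # children exist, but none has exited yet
        reaped.append((pid, os.waitstatus_to_exitcode(status)))
    return reaped

def demo(n=3, timeout=5.0):
    """Fork n short-lived children, then reap them as an init process would."""
    children = set()
    for code in range(n):
        pid = os.fork()
        if pid == 0:
            os._exit(code)  # child exits at once and lingers as a zombie
        children.add(pid)
    exit_codes = {}
    deadline = time.monotonic() + timeout
    while len(exit_codes) < n and time.monotonic() < deadline:
        for pid, code in reap_children():
            if pid in children:
                exit_codes[pid] = code
        time.sleep(0.01)
    return sorted(exit_codes.values())
```

A real init would drive this loop from a SIGCHLD handler or its event loop rather than by polling, but the system call at its core is the same.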

Table 1: Scope Expansion of Systemd

| Function | Traditional Tool/Method | Systemd Component |
| --- | --- | --- |
| Init / Boot | SysVinit / Upstart | systemd (PID 1) |
| Logging | syslogd, rsyslog | systemd-journald |
| Network Config | ifupdown, dhclient | systemd-networkd |
| DNS Resolution | /etc/resolv.conf, bind, dnsmasq | systemd-resolved |
| Time Sync | ntpd, chrony | systemd-timesyncd |
| Login Management | ConsoleKit, acpid | systemd-logind |
| Device Management | udev (standalone) | udev (integrated) |
| Core Dumps | Kernel / filesystem | systemd-coredump |

This aggregation of functions into a single software suite—and largely into the PID 1 process or tightly coupled binaries—is viewed as a betrayal of the Unix concept of simplicity.5 Critics contend that attempting to control services, sockets, devices, mounts, and timers within a single daemon suite creates an opaque and unmanageable complexity.5 Patrick Volkerding, the creator of Slackware, famously criticized this architecture, stating that attempting to control all these aspects within one daemon “flies in the face of the Unix concept of doing one thing and doing it well”.5

2.2 The Text vs. Binary Debate

A central pillar of the Unix philosophy is the use of plain text for configuration and data streams. The maxim “Write programs to handle text streams, because that is a universal interface” is central to the transparency of Linux systems.6 Text files are universally readable, recoverable with standard tools (cat, grep, awk, sed), and resilient to partial corruption.

Systemd introduced journald, which stores system logs in its own binary format rather than plain text. The arguments against this shift are practical and severe:

- Tool Dependency: Binary logs cannot be read without the specific journalctl utility. If a system is compromised or broken such that journalctl or its libraries are non-functional (e.g., a library mismatch or corruption in /usr/lib), the logs become inaccessible, blinding the administrator during a crisis.7

- Corruption Risks: Text files are robust; if a sector on a hard drive is corrupted, one might lose a few lines of a log file, but the rest remains readable. Binary databases, however, generally possess a rigid structure where header corruption or index damage can render the entire file unreadable.9

- The “Greppability” Loss: While journalctl offers powerful filtering capabilities (e.g., filtering by boot, priority, or time window), veteran administrators argue that losing the ability to use standard text processing pipelines on log files fundamentally breaks the Unix workflow. They argue that grep and awk are universal skills, whereas journalctl switches are tool-specific knowledge that may not transfer to other systems.7
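For comparison, `journalctl -o json` emits one JSON object per line, so journal data can still be fed into an ordinary pipeline, though only by going through `journalctl` itself, which is the dependency the first point objects to. The sketch below filters such a stream in Python. It assumes the standard journal field names `_SYSTEMD_UNIT`, `PRIORITY` (serialized as a string, 0 = emerg through 7 = debug) and `MESSAGE`; the sample records are invented, and real entries carry many more fields.

```python
import json

def filter_journal(lines, unit=None, max_priority=7):
    """Yield MESSAGE fields from `journalctl -o json` lines, filtered by unit and priority."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        entry = json.loads(line)
        if unit is not None and entry.get("_SYSTEMD_UNIT") != unit:
            continue
        # Assumption: treat a missing PRIORITY as 6 (info). Lower number = more severe.
        if int(entry.get("PRIORITY", 6)) > max_priority:
            continue
        message = entry.get("MESSAGE")
        if isinstance(message, str):  # non-UTF-8 messages are exported as byte arrays; skipped here
            yield message

# Invented sample records in the shape `journalctl -o json` produces.
SAMPLE = [
    '{"_SYSTEMD_UNIT": "sshd.service", "PRIORITY": "6", "MESSAGE": "Accepted publickey"}',
    '{"_SYSTEMD_UNIT": "sshd.service", "PRIORITY": "3", "MESSAGE": "error: kex failed"}',
    '{"_SYSTEMD_UNIT": "cron.service", "PRIORITY": "3", "MESSAGE": "job failed"}',
]

print(list(filter_journal(SAMPLE, unit="sshd.service", max_priority=3)))
# → ['error: kex failed']
```

In practice the lines would come from `journalctl -o json` piped to standard input (e.g. `filter_journal(sys.stdin, ...)`). `journalctl` can of course do this particular filtering itself with `-u` and `-p`; the point is only that exported output is ordinary text again.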

2.3 Architectural Monolith vs. Modularity

The defense of systemd often relies on the distinction between a “monolith” (a single binary executable) and a “monorepo” (a single source repository producing multiple binaries). Proponents argue systemd is a collection of 69+ binaries, not a single monolithic block, and that one can disable components like networkd or resolved.13

However, opponents argue that “coupling” is the true metric of a monolith. If Component A cannot function without Component B, and Component B requires specific version alignment with Component C, they effectively form a monolith regardless of how many binaries are on the disk. The tight coupling between systemd’s components implies that one cannot easily swap out the logging daemon or the session manager for an alternative without breaking the rest of the system or requiring extensive patching (shims).7 This “feature creep” forces a vendor lock-in where adopting the init system necessitates adopting the entire ecosystem, reducing the administrator’s freedom to choose the best tool for each specific job.4

3. Technical Controversies and Fact-Checking

Beyond philosophy, specific technical incidents have fueled the anti-systemd sentiment. These incidents are often cited as proof of the software’s immaturity, architectural recklessness, or disregard for system safety. This section reconstructs and fact-checks the most high-profile allegations against the bug reports, developer discussions, and other sources cited in this report.

3.1 The “rm -rf /” Bricking of UEFI Laptops

The Allegation: Critics claim that systemd made it possible to permanently “brick” (render unusable) a laptop simply by running a standard file deletion command (rm -rf /), a vulnerability that should never exist at the OS level.

The Facts:

This incident is confirmed but nuanced. It involved an interaction between systemd’s default behavior, the Linux kernel, and non-compliant UEFI firmware on MSI laptops.15

- Mechanism: Systemd mounts efivarfs (the EFI Variable File System) read-write (RW) by default. This filesystem exposes the motherboard’s NVRAM (Non-Volatile RAM) to the operating system as files. This RW access is technically necessary for tools like systemctl reboot --firmware-setup, which writes a variable to the NVRAM instructing the motherboard to boot into the firmware setup on the next restart.18

- The Incident: A user, likely running a cleanup script or accidentally executing rm -rf / as root, recursively deleted the contents of /sys/firmware/efi/efivars/.

- The Hardware Failure: Standard UEFI specifications dictate that deleting variables should revert them to defaults or simply remove boot entries. However, specific MSI laptop firmwares were poorly implemented. When all EFI variables were deleted, the firmware entered an unrecoverable state. The machine would not POST (Power On Self Test), requiring a motherboard replacement or external hardware flashing tools to fix.15

- The Controversy: When the bug was reported to systemd (Issue #2402), the developers initially argued this was not a systemd bug but a kernel or firmware bug.15 They maintained that the root user is supposed to have full control over the hardware and that protecting the hardware from the root user is the kernel’s responsibility. Lennart Poettering argued that efivarfs must be writable for boot loaders to function and that “root can do anything really”.20

- Resolution: The community argued that mounting this sensitive interface as RW by default violated the principle of “fail-safe” design. Eventually, the Linux kernel began marking most variable files immutable by default, so that a recursive delete can no longer remove critical EFI variables; root must first clear the attribute explicitly, which effectively mitigates the accidental case.15 Systemd continues to mount efivarfs RW, but the kernel safeguards now prevent the catastrophic failure mode.

Verdict: The bricking was real. While the root cause was buggy firmware, systemd’s design decision to expose raw NVRAM access via the filesystem by default—without safeguards initially—was the vector that enabled the destruction.
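Whether a given system currently exposes EFI variables read-write can be checked from its mount table. The sketch below parses text in the `/proc/self/mounts` format (device, mount point, filesystem type, options, ...) and reports the mode of the `efivarfs` mount; the sample table is illustrative. It only inspects state. Remounting read-only (for example with `mount -o remount,ro` on the efivars mount point) would also block legitimate writers such as `systemctl reboot --firmware-setup`, which is the trade-off discussed above.

```python
def efivarfs_mode(mounts_text):
    """Return 'rw' or 'ro' for the efivarfs mount, or None if it is not mounted."""
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[2] != "efivarfs":
            continue  # not the EFI variable filesystem
        options = fields[3].split(",")
        return "ro" if "ro" in options else "rw"
    return None

def current_mode(path="/proc/self/mounts"):
    """Read the live mount table (Linux only)."""
    with open(path, encoding="utf-8") as fh:
        return efivarfs_mode(fh.read())

# Illustrative mount table excerpt.
SAMPLE_MOUNTS = (
    "proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0\n"
    "efivarfs /sys/firmware/efi/efivars efivarfs rw,nosuid,nodev,noexec,relatime 0 0\n"
)
print(efivarfs_mode(SAMPLE_MOUNTS))  # → rw
```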

3.2 Binary Log Corruption and Data Loss

The Allegation: Users claim that journald’s binary logs corrupt easily during crashes, and unlike text logs, the data is totally unrecoverable.

The Facts:

Reports of journal corruption are widespread and substantiated by bug reports and user testimony.9

- Corruption Vector: When a system crashes (kernel panic or power loss), the binary files may not be closed properly. Upon reboot, journalctl --verify frequently reports “File corruption detected” or “entry timestamp out of synchronization”.11

- Recoverability: Unlike text logs, where a crash might result in a few garbled lines at the end of the file (leaving the preceding lines readable), binary corruption in journald often invalidates the file structure. While journald attempts to rotate the file and start a new one, the data leading up to the crash (often the critical data needed to diagnose why the crash happened) is frequently trapped in the unreadable binary blob.9

- Tooling Deficiency: There is no official “fsck” (file system check/repair) tool for journal files. The standard advice from systemd developers and distributions is to delete the corrupted files under /var/log/journal/, which guarantees data loss.9

- Btrfs Interaction: Corruption issues are exacerbated on Copy-on-Write (CoW) filesystems like Btrfs. If the “NoCOW” attribute (chattr +C) is not properly set for the journal directory, the heavy fragmentation caused by random writes can lead to corruption and write errors during crashes or full-disk scenarios.23

Verdict: Confirmed. The binary format introduces a failure mode (total unreadability) that does not exist with plain text logs, and the lack of repair tools remains a significant pain point for administrators.
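The asymmetry between the two failure modes can be shown with a deliberately simplified toy. The "binary" format below is invented for illustration (a 4-byte record count followed by length-prefixed records) and is not journald's on-disk format, which has its own header, hash tables and entry arrays. It only demonstrates why a single damaged length or header field can cut off everything behind it, while damage to a text log stays local to the lines it touches.

```python
import struct

def write_text_log(messages):
    return "".join(m + "\n" for m in messages).encode()

def read_text_log(blob):
    """Split on newlines; a damaged region only spoils the lines it touches."""
    return [line.decode(errors="replace") for line in blob.split(b"\n") if line]

def write_toy_binary(messages):
    """Toy format (invented): 4-byte big-endian record count, then length-prefixed records."""
    out = struct.pack(">I", len(messages))
    for m in messages:
        data = m.encode()
        out += struct.pack(">I", len(data)) + data
    return out

def read_toy_binary(blob):
    """Trust the header and length fields; any inconsistency aborts the whole read."""
    (count,) = struct.unpack_from(">I", blob, 0)
    offset, records = 4, []
    for _ in range(count):
        (length,) = struct.unpack_from(">I", blob, offset)
        offset += 4
        if offset + length > len(blob):
            raise ValueError("record runs past end of file")
        records.append(blob[offset:offset + length].decode())
        offset += length
    return records

msgs = ["boot ok", "disk warning", "kernel oops"]

text = bytearray(write_text_log(msgs))
text[9] = 0xFF  # flip one byte inside the second line
print(read_text_log(bytes(text)))  # first and third lines survive intact

binary = bytearray(write_toy_binary(msgs))
binary[4:8] = b"\xff\xff\xff\xff"  # corrupt the first record's length field
try:
    read_toy_binary(bytes(binary))
except ValueError as exc:
    print("unreadable:", exc)
```

A real binary format can mitigate this with checksums, redundant indexes or resynchronization markers; the toy omits all of them to isolate the structural point.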

3.3 The “Debug Flag” Kernel Panic and Linus Torvalds’ Intervention

The Allegation: Systemd developers caused kernel panics by hijacking the kernel’s debug command-line parameter and refused to fix it, leading to a ban of a core systemd developer from the Linux kernel.

The Facts:

This is a confirmed historical event that highlighted the friction between systemd developers and the Linux kernel maintainers.

- The Issue: Systemd was programmed to parse the generic command line argument debug. When a user enabled kernel debugging (by adding debug to the boot line), systemd also enabled its own verbose debug logging. This flooded the dmesg buffer and the console so aggressively that the system would often hang or fail to boot, effectively preventing the debugging it was meant to facilitate.25

- The Conflict: When the issue was reported (Bug #76935), core systemd developer Kay Sievers initially closed it as “NOTABUG,” arguing that generic terms do not belong to the kernel and that “generic terms are generic, not the first user owns them”.27

- The Escalation: Linus Torvalds, the creator and lead maintainer of the Linux kernel, intervened publicly. He criticized the attitude of the systemd developers for breaking existing kernel workflows and refusing to acknowledge regressions. Torvalds famously stated that he was not willing to merge code from a maintainer who is “known to not care about bugs and regressions and then forces people in other projects to fix their project”.25

- Resolution: The kernel developers had to patch the Linux kernel to hide the debug string from userspace specifically to protect users from systemd’s behavior.27

Verdict: Confirmed. This incident is frequently cited not just as a technical failure, but as evidence of a perceived arrogance in the systemd development culture regarding interoperability standards.
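The collision is easy to see from userspace: the kernel command line is a single flat, space-separated string exposed at `/proc/cmdline`, and any process may interpret any token in it. The sketch below tokenizes such a string and shows why a bare `debug` is ambiguous while a prefixed parameter such as `systemd.log_level=debug` (a documented systemd option) is not. It is a simplified tokenizer that ignores the kernel's quoting rules, and the ownership rule in `owner()` is a hypothetical illustration modeled loosely on the kernel's `module.parameter` naming convention, not how parameters are actually assigned.

```python
def parse_cmdline(cmdline):
    """Split a kernel command line into (key, value) pairs; bare flags get value None.
    Simplification: double-quoted values containing spaces are not handled."""
    params = []
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params.append((key, value if sep else None))
    return params

def owner(key):
    """Hypothetical rule: a dotted prefix names the consumer; bare keys are shared."""
    prefix, dot, _ = key.partition(".")
    return prefix if dot else "shared (kernel and any userspace reader)"

line = "BOOT_IMAGE=/vmlinuz root=/dev/sda1 ro quiet debug systemd.log_level=debug"
for key, value in parse_cmdline(line):
    if key in ("debug", "systemd.log_level"):
        print(f"{key}={value!r}: {owner(key)}")
```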

3.4 DNS Loops and systemd-resolved

The Allegation: systemd-resolved introduces unnecessary complexity to DNS handling, breaking local caching setups and causing DNS loops.

The Facts:

systemd-resolved has been a source of significant instability for users accustomed to the standard /etc/resolv.conf model.

- The Loop: A vulnerability (CVE-2017-15908) allowed a malicious DNS server to send a specially crafted NSEC record that drove systemd-resolved into an infinite loop, consuming 100% CPU and denying service. A separate flaw, CVE-2017-9445, let a crafted TCP DNS response trigger an out-of-bounds write in the same service.28

- Compatibility: systemd-resolved attempts to manage /etc/resolv.conf by making it a symlink to its own stub file. This breaks tools that expect to write to that file (like VPN clients, DHCP clients not aware of systemd, or container management tools).29 Users frequently report that disabling resolved and returning to static DNS configuration resolves latency and resolution failures.29

- Cache Poisoning Risks: Security researchers have identified vulnerabilities where systemd-resolved could be tricked into accepting malicious records into its cache, which it then serves to the local system.30

- Split-Horizon DNS: The complexity of systemd-resolved’s per-link DNS handling often complicates split-horizon DNS setups (where internal corporate domains resolve differently than external ones), leading to leaks of internal queries to public resolvers.29

Verdict: Validated. While systemd-resolved aims to standardize DNS across interfaces, its implementation has introduced new attack surfaces and reliability issues that the traditional /etc/resolv.conf model did not have.
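A quick way to tell which model a host uses is to follow the `/etc/resolv.conf` symlink and read its `nameserver` lines. Where `systemd-resolved` manages the file in its stub mode, it typically points at `/run/systemd/resolve/stub-resolv.conf`, which lists the local stub listener `127.0.0.53`. The sketch below encodes that heuristic; it is not an authoritative test, since distributions may link to other files (such as `/run/systemd/resolve/resolv.conf`, which lists upstream servers instead) or copy rather than link. The sample text is illustrative.

```python
import os

STUB_ADDRESS = "127.0.0.53"  # systemd-resolved's local stub listener

def parse_nameservers(text):
    """Return the addresses from 'nameserver' lines in resolv.conf-format text."""
    servers = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            servers.append(parts[1])
    return servers

def describe_resolv_conf(path="/etc/resolv.conf"):
    """Report whether the file is a symlink, where it leads, and whether it targets the stub."""
    with open(path, encoding="utf-8") as fh:  # open() follows symlinks
        servers = parse_nameservers(fh.read())
    return {
        "symlink": os.path.islink(path),
        "target": os.path.realpath(path),
        "nameservers": servers,
        "points_at_stub": STUB_ADDRESS in servers,
    }

# Illustrative stub-mode contents.
SAMPLE_STUB = "# managed by systemd-resolved\nnameserver 127.0.0.53\noptions edns0 trust-ad\n"
print(parse_nameservers(SAMPLE_STUB))  # → ['127.0.0.53']
```

On such a host, a tool that rewrites /etc/resolv.conf without knowing about this arrangement may either write through the symlink into a file that systemd-resolved regenerates or replace the symlink outright; both paths lead to the conflicts described above.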

4. The “Hard Dependency” and Ecosystem Fracture

One of the most intense sources of animosity toward systemd is the perception that it is “viral”—that it forces itself onto the ecosystem by making other software depend on it, thereby eliminating choice.

4.1 GNOME and the Logind Dependency

The GNOME desktop environment is the primary battleground for this argument. Historically, desktop environments were agnostic to the init system, relying on standard protocols like X11 and simple permissions.

- The Shift: Around GNOME 3.8 to 3.14, the project began to rely heavily on systemd-logind for session management, power management (suspend/resume), and seat management (handling multi-user switching). This move deprecated the previous standard, ConsoleKit.32

- The Impact on BSD and Non-Systemd Linux: Because logind is a component of systemd and tightly coupled to its APIs (and cgroups implementation), this effectively made systemd a hard dependency for running GNOME. This alienated BSD users (FreeBSD, OpenBSD) and Linux users who preferred other init systems (SysVinit, OpenRC).32

- The “Shim” Defense: GNOME developers argued that they rely on the interface (D-Bus APIs), not the implementation. This led to the creation of “shims” like systemd-shim or elogind (extracted logind) to allow GNOME to run without the full systemd suite.32 However, maintaining these shims is a massive effort, and they often lag behind the upstream systemd APIs. GNOME developers have admitted that they do not test non-systemd code paths, leading to a buggy experience for those avoiding systemd.32

Analysis: The “dependency hell” is real. While technically possible to run GNOME without systemd via shims, the development momentum is entirely focused on systemd. This effectively treats non-systemd systems as second-class citizens, confirming the fears of the “Init Freedom” movement.37

4.2 The BSD Implications

The Unix ecosystem includes the BSD family (FreeBSD, OpenBSD, NetBSD), which does not use systemd and has no intention of adopting it due to its Linux-specific dependencies (cgroups, namespaces). The rapid adoption of systemd-specific APIs by upstream projects (like GNOME, udev, and freedesktop.org standards) creates a divergence. Software developed for “Linux” increasingly means “Linux with systemd,” breaking cross-platform compatibility that existed for decades.1

This forces BSD developers to spend significant resources writing compatibility layers (like BSD’s reimplementation of logind interfaces) rather than improving their own systems.39 This is viewed by the BSD community as hostile to the broader Unix ecosystem, effectively walling off Linux from its Unix roots.2

5. Security Architecture: Attack Surface and Vulnerabilities

Systemd’s massive scope has significant security implications. Centralizing so many critical functions into a single suite creates a large, complex attack surface running with high privileges.

5.1 PID 1 Complexity and Crash Risks

In Unix, PID 1 (the init process) is the one process that must never die. If PID 1 crashes, the kernel panics and the system halts immediately. Therefore, traditional wisdom dictates that PID 1 should be as simple as possible to minimize bugs.

- Traditional Init: SysVinit is a tiny, simple program. The code path is small, making bugs that cause crashes extremely rare.

- Systemd: Systemd (PID 1) is a complex event loop handling D-Bus messages, automounts, socket activation, unit logic, and more. It links against significant shared libraries (libsystemd).

- Vulnerability: CVE-2019-6454 demonstrated the danger of this complexity. Sending a specially crafted D-Bus message to PID 1 caused sd-bus, the D-Bus library built into PID 1, to allocate an oversized stack buffer for the message's object path; the resulting stack overflow crashed PID 1 and, with it, the kernel. This allows an unprivileged local user to deny service to the entire machine.41 It illustrates the risk of overloading PID 1 with complex message-parsing functionality.

5.2 The Debug Shell Root Escalation

The Allegation: Systemd allows trivial root access via a debug shell.

The Facts:

Systemd includes a service debug-shell.service that spawns a root shell on TTY9 without a password. This is a feature designed for debugging boot problems where the system might hang before login services start.44

- The Risk: If an administrator enables this service and forgets to disable it—or if a misconfiguration enables it—any user with physical access (or VNC access) can switch to TTY9 (Ctrl+Alt+F9) and instantly gain root privileges.44

- Exploitation: CVE-2016-4484 is often cited in this context: holding the Enter key at the LUKS password prompt during boot could exhaust the retry limit and drop the user into a root shell in the initramfs environment.46 The flaw was in the cryptsetup initramfs script shipped by Debian and Ubuntu rather than in systemd itself, but it illustrates the same fail-open risk as a forgotten debug shell.
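Auditing for the forgotten-debug-shell case can be sketched in a few lines. Enabling the service with `systemctl enable debug-shell.service` records a symlink in a `*.wants/` directory under `/etc/systemd/system/`, and the shell can also be requested for a single boot with the kernel parameter `systemd.debug_shell`. The Python below checks both routes under stated assumptions: enablement lives under the given configuration root, and the kernel command line is passed in as a string. It does not cover every way a unit can be pulled into the boot (runtime units under `/run`, for example), and both function names are hypothetical.

```python
import os

def debug_shell_links(config_root="/etc/systemd/system"):
    """Return every *.wants/debug-shell.service link found under config_root."""
    hits = []
    if not os.path.isdir(config_root):
        return hits
    for entry in sorted(os.listdir(config_root)):
        wants_dir = os.path.join(config_root, entry)
        if entry.endswith(".wants") and os.path.isdir(wants_dir):
            link = os.path.join(wants_dir, "debug-shell.service")
            if os.path.lexists(link):  # lexists also catches dangling symlinks
                hits.append(link)
    return hits

def debug_shell_on_cmdline(cmdline):
    """True if the kernel command line requests the debug shell (with or without a value)."""
    return any(tok.split("=", 1)[0] == "systemd.debug_shell" for tok in cmdline.split())
```

On a live host one would call `debug_shell_links()` and pass the contents of `/proc/cmdline` to `debug_shell_on_cmdline()`; an empty list and `False` mean neither of these two routes is active.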

5.3 Polkit and Privilege Escalation

Systemd relies heavily on polkit for privilege negotiation (allowing unprivileged users to reboot, suspend, or mount drives). This contrasts with the traditional sudo model where privileges are granted based on group membership and explicit commands.

- CVE-2021-3560: A vulnerability in polkit allowed an unprivileged user to kill the authentication request at a precise moment. Due to a race condition in how polkit handled the disconnected client, it would treat the request as if it had come from root (UID 0) and approve it, granting root privileges to the attacker.47 This highlights the complexity of the asynchronous, message-passing security model preferred by systemd compared to the traditional, synchronous models.
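The underlying bug class, an authorization check that turns a failed lookup into a privileged answer, can be sketched in a few lines. Everything here is hypothetical and heavily simplified: `CallerGone`, `lookup_caller_uid` and the two `is_authorized_*` functions are illustrative names loosely modeled on the published description of CVE-2021-3560, not polkit's code. The sketch only contrasts a fail-open check with a fail-closed one.

```python
class CallerGone(Exception):
    """Raised when the requesting client disconnected before it could be identified."""

def lookup_caller_uid(connected_clients, client_id):
    """Hypothetical stand-in for asking the message bus who sent a request."""
    if client_id not in connected_clients:
        raise CallerGone(client_id)
    return connected_clients[client_id]

def is_authorized_fail_open(connected_clients, client_id):
    # BUG PATTERN: uid starts at 0 (root) and the error path leaves it there.
    uid = 0
    try:
        uid = lookup_caller_uid(connected_clients, client_id)
    except CallerGone:
        pass
    return uid == 0

def is_authorized_fail_closed(connected_clients, client_id):
    # Safer pattern: any failure to identify the caller means "deny".
    try:
        return lookup_caller_uid(connected_clients, client_id) == 0
    except CallerGone:
        return False

clients = {"attacker": 1000}
del clients["attacker"]  # the attacker kills its request mid-check
print(is_authorized_fail_open(clients, "attacker"))    # → True
print(is_authorized_fail_closed(clients, "attacker"))  # → False
```

The race in the real bug lies in *when* the client disappears; the sketch collapses that timing into a deleted dictionary entry so the fail-open logic is visible on its own.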

6. The Distro Rebellion: Movements for “Init Freedom”

The controversy was severe enough to cause schisms in major Linux distributions, leading to the creation of entirely new projects dedicated to avoiding systemd. These “forks” serve as a refuge for users who reject systemd’s hegemony.

6.1 Devuan: The Debian Fork

When the Debian Technical Committee voted to adopt systemd as the default init system in 2014, a group of developers, branding themselves the “Veteran Unix Admins,” forked the project to create Devuan.38

- Manifesto: Devuan’s core mission is “Init Freedom.” They argue that the user should have the choice of init system (SysVinit, OpenRC, Runit) and that Debian’s adoption of systemd locked users in due to deep package dependencies.38

- Technical Basis: Devuan maintains a modified package repository. They strip systemd dependencies from packages or provide patched versions. They often use elogind to provide the D-Bus interfaces needed by desktop environments without running systemd as PID 1.48

- Significance: Devuan is not a fringe hobby project; it is a stable, long-term release that tracks Debian Stable, proving that a non-systemd Debian is technically viable, albeit with significant maintenance overhead.

6.2 Artix Linux: The Arch Fork

Arch Linux, known for its simplicity and user-centric customization, adopted systemd early (2012). This alienated users who felt systemd contradicted Arch’s “Keep It Simple, Stupid” (KISS) principle, which traditionally favored simple text files over complex abstractions.

- Response: Artix Linux was created to provide the Arch experience (rolling release, pacman package manager) using alternative init systems: OpenRC, Runit, s6, or dinit.37

- Rationale: Artix users argue that systemd is “bloated” and opaque. They prefer init systems that use readable shell scripts (like Runit or OpenRC) and do not have the massive attack surface of systemd. They actively maintain repositories that strip systemd dependencies from Arch packages.50

6.3 The Void Linux Approach

Void Linux is an independent distribution (not a fork) that explicitly rejects systemd in favor of Runit.

- Philosophy: Void proves that a modern, usable desktop and server OS can exist without systemd. It uses runit for supervision, which is praised for its speed, code minimalism, and reliability. Void users often cite the stability and understandability of runit as a direct counter-argument to systemd’s complexity.50
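The core of what a supervisor adds over plain SysVinit, restarting a service when it dies, fits in a short loop. The sketch below is a hypothetical, minimal supervisor in Python: it reruns a command until it exits cleanly or a restart budget is spent. That stopping rule resembles systemd's `Restart=on-failure`; runit's `runsv`, by contrast, runs a service's `./run` script and restarts it whenever it exits unless the service has been told to stay down. Real supervisors also add backoff, logging, signal forwarding and a control interface.

```python
import subprocess
import sys
import time

def supervise(argv, max_restarts=3, delay=0.1):
    """Run argv; restart it after a non-zero exit, up to max_restarts times.
    Returns the exit code of every run, in order."""
    exit_codes = []
    for attempt in range(max_restarts + 1):
        code = subprocess.run(argv).returncode
        exit_codes.append(code)
        if code == 0:
            break  # clean exit: nothing to restart
        if attempt < max_restarts:
            time.sleep(delay)  # crude fixed delay between restarts
    return exit_codes

# A deliberately crashing "daemon" for demonstration.
crasher = [sys.executable, "-c", "import sys; sys.exit(3)"]
print(supervise(crasher, max_restarts=2, delay=0))  # → [3, 3, 3]
```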

7. Sociological Factors: Governance and Attitude

A report on systemd would be incomplete without addressing the human element. The controversy is fueled as much by the behavior of the developers and the perceived shift in power dynamics as by the code itself.

7.1 The “Lennart Poettering” Factor

Lennart Poettering, the creator of systemd (and previously PulseAudio), is a polarizing figure in the Linux community. His communication style is often perceived as dismissive of criticism and traditional Unix values.

- “Not a Bug”: The “Debug Flag” incident (Section 3.3) is the archetypal example where valid concerns from the kernel community were initially dismissed as irrelevant because they didn’t fit the systemd worldview.25

- Disregard for POSIX: Poettering has famously stated that POSIX is not the gold standard and that Linux should chart its own course, effectively declaring that compatibility with other Unix systems is a secondary concern (or not a concern at all).1

- Toxicity: The debate became so vitriolic that Poettering reported receiving death threats; he later left Red Hat and joined Microsoft in 2022. Conversely, proponents argue that the anti-systemd mob is abusive and resistant to necessary change, often attacking the person rather than the code.14

7.2 Red Hat’s Influence

Critics often view systemd as a “corporate takeover” of Linux by Red Hat (now IBM).

- The Argument: Because Red Hat employs the core systemd developers and controls the Fedora project (where systemd is incubated), they effectively dictate the direction of the entire Linux userspace. Independent distributions (like Arch or Debian) are forced to follow suit because the upstream software (GNOME, udev) is developed by the same corporate entity.53

- The “NSA Conspiracy”: Some fringe elements of the anti-systemd movement have claimed that the complexity and opacity of systemd make it a potential hiding place for backdoors (like those associated with the NSA), referencing Red Hat’s security relationships. It must be noted that there is no technical evidence to support this claim, but the existence of the theory highlights the profound lack of trust some users have in centralized, corporate-controlled software.54

8. Comparative Analysis: Systemd vs. Traditional Init

The following table summarizes the structural differences that drive the controversy, providing a clear comparison for technical decision-makers.

Table 2: Architectural Comparison

| Feature | Systemd | SysVinit / OpenRC / Runit | Controversy Point |
| --- | --- | --- | --- |
| Scope | Init, Logging, Network, Time, DNS, Login, Mounts | Init and Process Supervision only | Bloat/Monolith: Systemd does too much; breaks “Do one thing well.” |
| Configuration | Declarative Unit Files (.service) | Imperative Shell Scripts | Transparency: Shell scripts are debuggable code; Unit files are black boxes. |
| Logs | Binary (journald) | Plain Text (/var/log/syslog) | Recoverability: Binary logs corrupt easily and require tools to read. |
| Dependencies | Parallel, Socket-Activated | Serial (mostly), Script-based | Complexity: Parallelism is faster but harder to debug race conditions. |
| Inter-Process | D-Bus (Binary IPC) | Signals, Pipes, Sockets | Opacity: D-Bus traffic is harder to sniff/debug than text pipes. |
| PID 1 | Huge code base, complex logic | Tiny, simple code base | Security: PID 1 crash = Panic. Systemd increases the crash surface. |
| Philosophy | Integration & Unification | Modularity & Diversity | Ecosystem: Systemd encourages a monoculture; Init Freedom encourages diversity. |

9. Conclusion

The opposition to systemd is neither a monolithic block nor entirely based on nostalgia. It is a multifaceted resistance made up of philosophical purists who view the abandonment of text streams as a regression, system administrators who have suffered actual data loss from journal corruption, security auditors concerned by the massive attack surface of PID 1, and non-Linux Unix communities being marginalized by the Linux-centric coupling of user-space software.

While systemd has undeniably succeeded in becoming the standard for mainstream Linux distributions due to its powerful feature set, parallelization capabilities, and standardization, the reasons for resisting it are grounded in verifiable technical trade-offs. The incidents of corrupted binary logs, the fragile handling of EFI variables that led to bricked hardware, and the architectural centralization of power substantiate the critics’ claims that systemd sacrifices robustness and simplicity for convenience and speed.

The existence and persistence of Devuan, Artix, and Void Linux demonstrate that a significant minority of the ecosystem considers these trade-offs unacceptable. As systemd continues to expand its scope into home directories (homed) and memory management (oomd), the friction between the “integrated” and “modular” camps is likely to persist, defining the fault lines of the Linux ecosystem for years to come.

Works cited

- systemd – Wikipedia, accessed on December 16, 2025, https://en.wikipedia.org/wiki/Systemd

- The Tragedy of systemd – FreeBSD Presentations and Papers, accessed on December 16, 2025, https://papers.freebsd.org/2018/bsdcan/rice-the_tragedy_of_systemd/

- The Tragedy of systemd – YouTube, accessed on December 16, 2025, https://www.youtube.com/watch?v=o_AIw9bGogo

- systemd has been a complete, utter, unmitigated success – Tyblog, accessed on December 16, 2025, https://blog.tjll.net/the-systemd-revolution-has-been-a-success/

- Unix philosophy – Wikipedia, accessed on December 16, 2025, https://en.wikipedia.org/wiki/Unix_philosophy

- Revisiting the Unix philosophy in 2018 : r/programming – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/programming/comments/9v8xrr/revisiting_the_unix_philosophy_in_2018/

- systemd really, really sucks, accessed on December 16, 2025, https://ser1.net/post/systemd-really,-really-sucks

- I really don’t understand the scenario where binary logging is a problem. journa… | Hacker News, accessed on December 16, 2025, https://news.ycombinator.com/item?id=27651678

- Systemd journal – a design problem apparent at system crash / System Administration / Arch Linux Forums, accessed on December 16, 2025, https://bbs.archlinux.org/viewtopic.php?id=169966

- systemd’s binary logs and corruption : r/linux – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/linux/comments/1y6q0l/systemds_binary_logs_and_corruption/

- Repairing CENTOS 7 Journal Corruption – Off Grid Engineering, accessed on December 16, 2025, https://off-grid-engineering.com/2019/09/10/repairing-centos-7-journal-corruption/

- Why do people see the binary log file format of systemd as bad? : r/linuxquestions – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/linuxquestions/comments/a0u8d2/why_do_people_see_the_binary_log_file_format_of/

- systemd: monolithic or not, accessed on December 16, 2025, https://www.linuxquestions.org/questions/linux-general-1/systemd-monolithic-or-not-4175539816/

- What’s wrong with systemd? : r/linux – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/linux/comments/3u2ahq/whats_wrong_with_systemd/

- SystemD appreciation post/thread : r/archlinux – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/archlinux/comments/xr73b5/systemd_appreciation_postthread/

- systemd mounts EFI variables as rw by default, meaning you could brick your device with a simple rm -rf – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/sysadmin/comments/438yk0/systemd_mounts_efi_variables_as_rw_by_default/

- Mount efivarfs read-only · Issue #2402 · systemd/systemd – GitHub, accessed on December 16, 2025, https://github.com/systemd/systemd/issues/2402

- Does systemd still have EFI variables as rw by default? : r/archlinux – Reddit, accessed on December 16, 2025, https://www.reddit.com/r/archlinux/comments/1jo6657/does_systemd_still_have_efi_variables_as_rw_by/

- It is *not* a systemd bug to mount efivars read/write. The efitools – Hacker News, accessed on December 16, 2025, https://news.ycombinator.com/item?id=11008880

- Systemd mounted efivarfs read-write, allowing motherboard bricking via ‘rm’ | Hacker News, accessed on December 16, 2025, https://news.ycombinator.com/item?id=10999335

- journalctl --verify reports corruption – Unix & Linux Stack Exchange, accessed on December 16, 2025, https://unix.stackexchange.com/questions/86206/journalctl-verify-reports-corrup